The final published version of this report is now available from the Jisc website

TL;DR: When we think about artificial intelligence (AI) we tend to picture the anthropomorphic robots of classic science fiction stories. In an education context, perhaps it’s a robot teacher gliding silently down a school corridor, some time in the future. But AI is all around us now, often as an invisible addition to apps and services that we use every day. In this report I’ll take a look at how AI can be, and already is being, used in education, from recognising and classifying data to generating new content from scratch. I’ll also look at how AI can be cheated and even defeated by a determined adversary, and consider how we can ensure that an ethical approach is taken to using AI in education.

- Artificial intelligence in a nutshell

AI has been in the headlines a lot in the last couple of years – perhaps most notably thanks to the work of Google’s DeepMind division. DeepMind famously produced the world-beating AlphaGo AI, and the TensorFlow software used by DeepMind became very widely adopted after Google made the code ‘open source’. This has already had a virtuous circle effect for Google, with most AI developers cutting their teeth on TensorFlow projects, and the software has quickly become established as the de facto standard for AI.

But AI has actually been around for a lot longer – the precursors of today’s AI techniques date back to research from the 1960s, with the advent of genetic algorithms and neural networks. Three aspects of the way we use technology have changed dramatically in the intervening years. First, we now have pervasive connectivity to vast cloud computing resources through the Internet. Second, the major service providers have access to the millions or even billions of data points that are needed to ‘train’ the AI. Third, neural network research has dramatically accelerated with the availability of free software like TensorFlow and reference datasets like ImageNet.

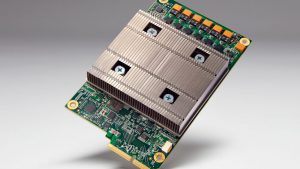

Google Compute Engine Tensor Processing Unit – cloud-based neural networking, photo credit Google

- AI for classification – from self-driving cars to Learning Analytics

Google’s DeepMind artificial intelligence beat the (human) world champion at Go by building predictive models based on learning from thousands of previous games. Could a similar approach help teachers, learners and institutions to continuously adapt to improve retention and learning outcomes? Could an AI one day generate a personalised curriculum for learners in a similar way to the generative art produced by Facebook’s Eyescream or Google’s Deep Dream research projects?

With 90% of 16 to 21 year olds in the UK owning a smartphone, today’s learners are constantly generating a ‘digital exhaust’ of data. It’s not unreasonable to think that some of that data could potentially be used to improve teaching and learning outcomes. But when we start to make predictions based on linking data that the institution holds with learners’ own data, a whole new class of ethical issues arises. Do you know where your data is, and where it’s going?

It’s fascinating to see how AI is used in self-driving cars, and this perhaps gives us some clues as to how it could be used in education. Self-driving cars are typically studded with cameras, ultrasonic sensors and LIDAR systems which send out millions of laser pulses per second to map the environment around the car. AI is used for everything from recognising street signs such as speed limit warnings to more probabilistic applications such as recognising when a cyclist appears to be gearing up to change lanes. In the self-driving car each of these situations would typically lead to a particular response – take avoiding action, slow down or stop, and so on. The video below gives us an idea of what the self-driving car ‘sees’, using the Darknet neural network framework to classify the objects it encounters.

In an education context some of the sensors may well be (CCTV) cameras, but our institutions are already kitted out with a wide range of IT systems that know who is using them, when, and (to an extent) what for. Consider for example the Virtual Learning Environment or Learning Management System, library systems, attendance monitoring systems and so on. At the moment the data held in these systems is typically standalone, and often consists of diagnostic log files that are likely to be deleted without being processed in any way. This digital exhaust could tell us a great deal about how engaged a learner is, and when we start to link datasets together we can potentially build up quite a nuanced picture of when and where learning takes place. This is what we are starting to do now with predictive analytics as part of the Jisc Learning Analytics service, which has been co-created with a community of around 100 institutions and vendors.
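
To make the idea of linking datasets a little more concrete, here is a minimal sketch in Python. It joins two invented extracts (VLE logins and library loans) on a student identifier and adds up a crude weighted activity count. The field names, the weights and the sample records are all assumptions made for illustration; this is not the data model or scoring used by the Jisc Learning Analytics service.

```python
from collections import defaultdict

# Hypothetical extracts from two standalone systems (invented sample data).
vle_logins = [
    {"student_id": "s1", "week": 1},
    {"student_id": "s1", "week": 1},
    {"student_id": "s1", "week": 2},
    {"student_id": "s2", "week": 1},
]
library_loans = [
    {"student_id": "s1", "week": 2},
    {"student_id": "s3", "week": 1},
]


def engagement_scores(logins, loans, login_weight=1.0, loan_weight=2.0):
    """Link the two datasets by student_id and return a weighted activity count.

    The weights are arbitrary placeholders, not validated measures of engagement.
    """
    scores = defaultdict(float)
    for event in logins:
        scores[event["student_id"]] += login_weight
    for event in loans:
        scores[event["student_id"]] += loan_weight
    return dict(scores)


scores = engagement_scores(vle_logins, library_loans)
for student, score in sorted(scores.items()):
    print(student, score)
```

Even in a toy like this, the ethical questions raised earlier apply: the moment the two extracts share a student identifier, the linked record says more about a learner than either system did on its own.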

Taking this example a little further, the Jisc-supported Learnometer startup has built an inexpensive hardware device for classrooms and lecture theatres which monitors a range of environmental parameters, from noise and light levels to temperature, humidity and pollution. As we start to look at linking learning outcomes, timetabling systems and Learnometer data, we may well find a correlation between poor learning outcomes and rooms that are simply unsuitable for teaching. One school in Dubai that has been piloting Learnometer has already changed over 160 rooms in response to the data from the system.
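
As a rough sketch of the kind of check this implies, the snippet below computes a Pearson correlation coefficient between an environmental reading and an outcome measure for a handful of rooms. The figures are invented purely to show the calculation and are not Learnometer data; the variable names are hypothetical too.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    return covariance / sqrt(spread_x * spread_y)


# Invented figures: average noise level per room, and average mark for
# classes timetabled in that room.
noise_db = [40, 45, 50, 55, 60]
average_mark = [68, 66, 63, 61, 58]

r = pearson(noise_db, average_mark)
print(f"correlation between noise and marks: {r:.2f}")
```

A strongly negative value would only tell us that noisier rooms and lower marks tend to go together in this sample. It would not show that the noise caused the lower marks, which is one reason a human needs to interpret the result before any rooms are changed.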

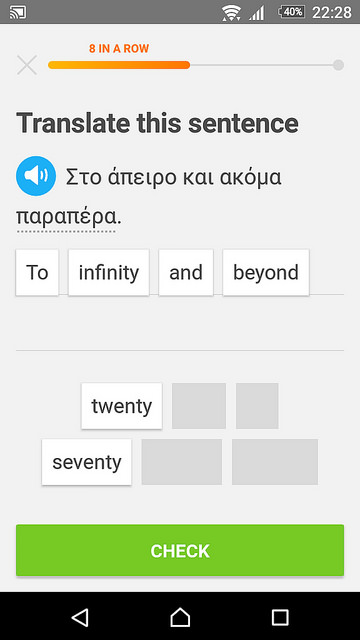

But AI has a wide range of other potential applications in education – perhaps the most notable being adaptive learning, where the curriculum is modified dynamically in response to the learner’s strengths and weaknesses. In this case we could view the AI as a coach or mentor, identifying areas where the student could potentially improve their performance. For example, the AI might give the student additional exercises to complete that would help them to better understand a topic they are struggling with. Already there are a number of commercial systems that use this sort of predictive analytics – such as the DuoLingo language learning app.

Screenshot from DuoLingo – AI powered language learning app, CC BY Flickr user Duncan Hall
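
The adaptive learning idea described above can be sketched very simply. The example below is a hand-written rule rather than a trained model, and it makes no claim to reflect how any commercial product works: the topic names, the threshold and the exercise bank are hypothetical. It picks out the topics where a learner is scoring below a threshold and queues up extra exercises for them, weakest topic first.

```python
def next_exercises(scores_by_topic, exercise_bank, threshold=0.6, per_topic=2):
    """Return extra exercises for topics where the learner scores below threshold.

    Topics are handled weakest first; at most per_topic exercises are taken
    from each topic's entry in the exercise bank.
    """
    weak_topics = sorted(
        (topic for topic, score in scores_by_topic.items() if score < threshold),
        key=lambda topic: scores_by_topic[topic],
    )
    plan = []
    for topic in weak_topics:
        plan.extend(exercise_bank.get(topic, [])[:per_topic])
    return plan


# Hypothetical learner record and exercise bank.
learner_scores = {"verbs": 0.9, "plurals": 0.4, "tenses": 0.55}
bank = {
    "plurals": ["plurals-1", "plurals-2", "plurals-3"],
    "tenses": ["tenses-1"],
    "verbs": ["verbs-1"],
}

plan = next_exercises(learner_scores, bank)
print(plan)
```

A real adaptive system would replace the fixed threshold with a model of what the learner is likely to know, but the shape of the loop (measure, find the gaps, choose the next activity) stays much the same.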

Another application of AI that we are starting to see in education is the creation of chat bots – your human staff might only work office hours, Monday to Friday, but your bot can be active 24×7, helping learners in any time zone. What’s more, it will never take industrial action, and you don’t have to pay it a pension. Most of the applications of chat bots that we have seen so far are in the area of student advice and guidance, but Georgia Tech notably deployed ‘Jill Watson’, an AI bot, as a teaching assistant – and it was months before students realised.

- Generative AI – what did your AI make today?

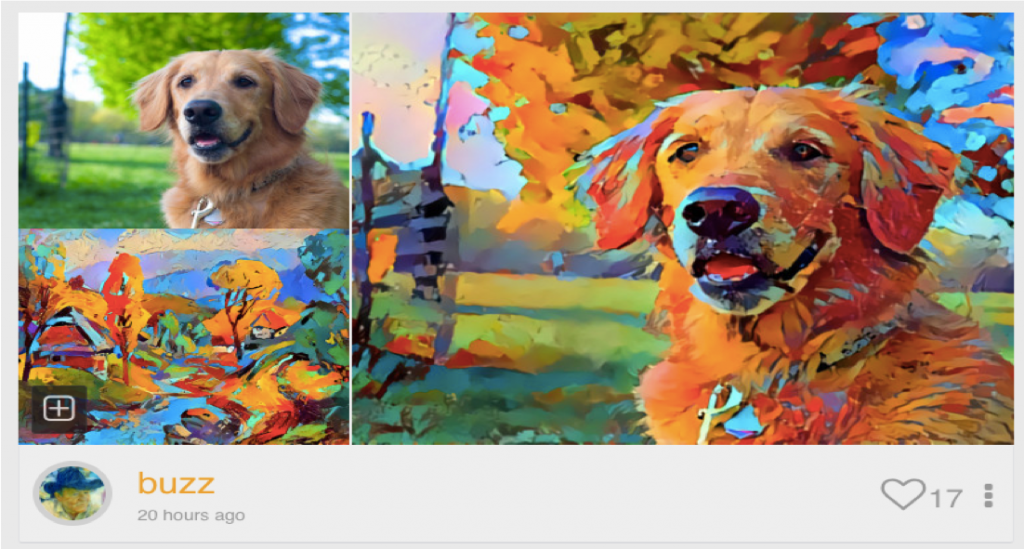

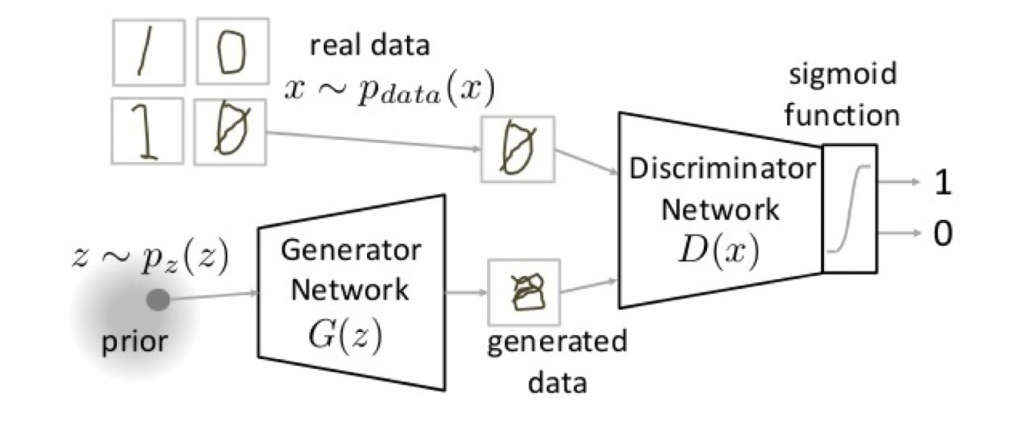

We are very familiar with classification type aspects of AI – such as when Google Photos automatically finds all the pictures you took of a family member, all your pictures of cats and trees, and perhaps even cats stuck up trees. But AIs are getting much smarter, and learning how to create new digital content all by themselves. This ‘generative’ AI generally works by pitting two neural networks against each other – a generator, and a classifier (often called a discriminator). The generator produces some output based on an initial data source, which could even be white noise, and then passes it to the classifier for scoring. Let’s picture that you are the generator, trying to create a photorealistic picture of a cat – the more cat-like your picture, the higher your score.
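
The feedback loop between generator and classifier can be illustrated with a deliberately simplified toy. In the sketch below the ‘picture’ is just four numbers, the classifier is a fixed scoring function rather than a neural network, and the generator improves by random trial and error (hill climbing) rather than by training. In a real generative adversarial setup both sides are neural networks and the classifier learns too, so please read this as an illustration of the scoring loop only. All names and values are invented.

```python
import random

# Stand-in for "what a cat looks like": four made-up feature values.
REFERENCE = [0.2, 0.8, 0.5, 0.9]


def classifier_score(candidate):
    """Score in (0, 1]: the closer the candidate is to REFERENCE, the higher."""
    distance = sum((c - r) ** 2 for c, r in zip(candidate, REFERENCE))
    return 1.0 / (1.0 + distance)


def generate(steps=2000, step_size=0.05, seed=0):
    """Start from noise and keep any random tweak that the classifier scores higher."""
    rng = random.Random(seed)
    candidate = [rng.random() for _ in REFERENCE]  # initial noise
    initial_score = classifier_score(candidate)
    best_score = initial_score
    for _ in range(steps):
        proposal = [c + rng.uniform(-step_size, step_size) for c in candidate]
        score = classifier_score(proposal)
        if score > best_score:
            candidate, best_score = proposal, score
    return candidate, initial_score, best_score


picture, initial_score, final_score = generate()
print(f"score went from {initial_score:.3f} to {final_score:.3f}")
```

The generator never sees the reference directly. It only sees the score, which is exactly the position of the cat-drawing generator in the description above.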

This simple feedback mechanism turns out to be highly effective at creating pictures, videos and even text that are a good match for some starting reference point. A related technique, artistic style transfer, has been widely adopted to implement advanced photo filters in social media apps. Microsoft have taken the generative approach one step further by creating an AI that generates a picture based on text.

Generative AI clearly has a lot of potential in the sort of personalised learning scenarios discussed above – wouldn’t it be great if the lecturer didn’t have to map out all of the possible pathways through the material and devise the reinforcement exercises in each area? However, generative AI also raises all sorts of interesting questions about cheating. Rather than simply downloading someone else’s attempt to answer an essay question, a learner who was attempting to cheat could use a generative AI to adapt that answer to their own writing style. Perhaps this will lead to an arms race, with both sides deploying ever more advanced AIs to cheat and to detect cheating.

Generative AI is particularly interesting in art and design, where it’s easy to see it simply becoming another tool available to practitioners. We often talk about the need to develop digital skills and digital capability in our institutions and the general population, and this could be a very stark example of an area where some quite deep technical concepts need to be assimilated in order to achieve meaningful results. We could ask ourselves here how far down the rabbit hole a student or teacher might expect to go.

In a few years’ time it may be commonplace to find art and design students doing Python programming to train a TensorFlow AI model. This might seem unlikely at present, but we should keep in mind that Python is one of the programming languages that today’s schoolchildren will typically encounter as they study Computing. TensorFlow works reasonably well even on the humble Raspberry Pi computer, which has proven popular in classrooms across the world, as I show in the video demo below.

- Adversarial objects – a shock to the system

We tend to assume that the uses people will put technology to are largely benign, if not socially productive in themselves. But that isn’t always the case, as we’ve seen from the plagiarism example above. What happens when someone is actively trying to cheat the AI which controls or monitors some critical institutional process?

This might seem hard to do, but we’ve recently seen some quite alarming examples of small perturbations in data that are enough to trick machine learning models into mis-classifying the data they’re fed. One particularly notable example is the work of the labsix student team at MIT, whose examples include a 3D printed turtle identified by the neural network as a rifle, a photo of a tabby cat identified as guacamole, and a baseball that the neural network thinks is an espresso.
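
To see why a small perturbation can be enough, consider the toy sketch below. It uses a made-up linear classifier with four features and nudges every feature by a small, bounded amount in whichever direction lowers the score. This is the same intuition as the well-known ‘fast gradient sign’ style of attack applied to a linear model; it is not the method labsix used, and the weights, features and labels are all invented for illustration.

```python
# Hypothetical linear classifier: a positive score means "turtle".
WEIGHTS = [0.9, -0.6, 0.4, -0.8]
BIAS = 0.0


def score(features):
    """Weighted sum of the features plus the bias."""
    return sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS


def classify(features):
    return "turtle" if score(features) > 0 else "rifle"


def perturb(features, epsilon):
    """Shift each feature by exactly epsilon in the direction that lowers the score."""
    return [x - epsilon * (1 if w > 0 else -1) for w, x in zip(WEIGHTS, features)]


original = [0.5, 0.4, 0.5, 0.3]
adversarial = perturb(original, epsilon=0.1)

print(classify(original), "->", classify(adversarial))
```

No single feature moves by more than 0.1, yet because every small change pushes the score the same way, the label flips. Image classifiers have many thousands of input values, so each individual change can be far smaller and still add up.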

Whilst it’s easy to leap to examples of the sort of cheating behaviour we looked at above, adversarial AI inputs could be a goldmine for pranksters, and we should expect a constant stream of sophomore level pranks like MIT’s infamous ‘hacks’. However, there is a deeper implication here that we need to keep sight of – processes that are entirely automated are inherently highly vulnerable to adversarial interference, and some element of human interaction is essential in any cases where there are consequences for those involved. Even now we are seeing generative AI being used to create fake photo and video imagery, to implicate innocent victims in crimes, for social shaming, and other nefarious purposes. Imagine a situation where the AI is both judge and jury, and the potential for disaster becomes clear.

- Conclusions

We’ve talked a lot in this report about artificial intelligence, but the truth is that none of these computer programs is remotely intelligent in the way that a human being is. There is no sentience here, even if a digital assistant like Amazon Alexa or Apple’s Siri can on occasion give a passable pretence at this. The computer is simply following a script, and using AI to help it recognise cues that will trigger particular parts of that script.

Like the self-driving car braking sharply to avoid a cat running out into the road, AIs in education have the potential to be constantly sampling far more data points than any human being ever could. They can also instantly marry linked data from disparate sources to help steer both learners and teachers towards the best possible outcomes. However, as we’ve seen, AIs are still quite immature and somewhat vulnerable to bad actors that might want to distort results for nefarious purposes – or simply for laughs.

This is why ethics need to be at the forefront of any projects to use AI in education. We need to be clear what data is being processed, where, and how. And any actions or recommendations made by an AI need to be subject to human review. Even then there is one final issue that we have to face – AIs essentially evolve the computer program that drives them, which can make it hard to identify why an AI made a particular decision. If we could ask the self-driving car why it swerved, the best that it might be able to say is that ‘something 75% catlike jumped out in front of me’. In much more nuanced situations such as teaching and learning we may find that the AI moves in mysterious ways.

Feedback

This is a draft report – we’d love to know what you think of it, so please do leave a comment below, or get in touch. Be sure to read our other horizon scanning reports and keep a look out for new material – we aim to put a new report out every couple of months.

About Jisc

We are a not-for-profit company owned by and serving the HE, FE and skills sectors in the UK. There are around 18 million users of our shared services like the Janet network, eduroam, JiscMail, and our shared data centres. We also do a number of national deals with IT vendors and publishers, such as Amazon, Google, Microsoft, Elsevier and Springer. Our other key activity is to provide advice & guidance to the sector on digital technologies in research and education.

My role at Jisc is to lead a small group which is exploring the potential in research and education of emerging technologies like Virtual and Augmented Reality, machine learning and brain computer interfaces. We help institutions to devise and implement their digital strategies, and to build the evidence base around new technologies.

Another application (probably falling under section 2 of this article) is AI for classification of language and intent. I think I’m right in saying that this is an area where AI is being applied. So in this sense, AI is something that can be applied to things like search, or even things like personal assistants, where a greater understanding of the user’s input (their intent, context, etc.) could make for a more beneficial, pleasant and useful outcome for users.